Advanced Pose Estimation Techniques

Exploring state-of-the-art methods for human pose estimation and tracking

Advanced Pose Estimation Techniques

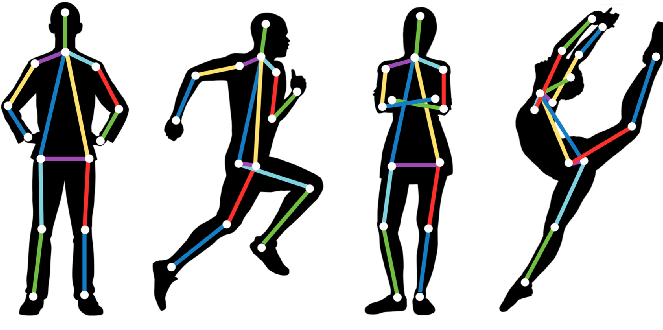

Pose estimation stands as one of the most fascinating advancements in the field of computer vision. It bridges the gap between visual understanding and motion interpretation, allowing machines to interpret human gestures, movements, and interactions in real time. From fitness apps that correct your squats to complex systems analyzing athletes’ biomechanics, pose estimation has reshaped how we perceive and analyze human movement.

This blog dives deep into the evolution, methodologies, and future directions of pose estimation — exploring both theoretical foundations and practical applications.

Evolution of Pose Estimation

Traditional Methods

In the early days, before deep learning transformed computer vision, pose estimation primarily relied on hand-crafted features, template matching, and classical machine learning models. Techniques such as HOG (Histogram of Oriented Gradients), SIFT (Scale-Invariant Feature Transform), and PCA (Principal Component Analysis) were widely used to represent body parts. These methods often combined with graphical models like Pictorial Structures to represent relationships between joints.

However, these approaches had significant limitations:

- They were sensitive to occlusion, lighting changes, and background clutter.

- They struggled to handle complex or dynamic poses.

- Real-time performance was nearly impossible due to computational inefficiency.

While traditional methods laid the foundation, they could not generalize across the vast variability in human appearance and motion — a gap that deep learning would soon bridge.

Deep Learning Era

The introduction of Convolutional Neural Networks (CNNs) revolutionized pose estimation. With CNNs, models could learn hierarchical features directly from images, eliminating the need for manual feature engineering.

One of the earliest breakthroughs came with DeepPose (by Google, 2014), which directly regressed keypoint coordinates from image pixels. This marked a paradigm shift — pose estimation moved from handcrafted heuristics to end-to-end trainable systems.

Soon after, architectures like Stacked Hourglass Networks, OpenPose, and HRNet improved accuracy dramatically. These networks leveraged deep feature maps, multi-scale context, and structural reasoning to detect human joints even in crowded or partially visible scenes.

Today, deep learning–based pose estimation is the backbone of many advanced systems used in sports, healthcare, robotics, AR/VR, and entertainment.

State-of-the-Art Approaches

OpenPose

OpenPose, developed by Carnegie Mellon University’s Perceptual Computing Lab, was the first open-source multi-person pose estimation framework.

Its key innovation lies in Part Affinity Fields (PAFs) — vector fields that represent the direction and association between keypoints. This allows the system to identify multiple people in a single frame and correctly link body parts to individuals, even in overlapping scenarios.

OpenPose remains a gold standard in pose estimation research, providing both accuracy and flexibility, and serving as a foundational model for many subsequent frameworks.

MediaPipe

Google’s MediaPipe framework brought pose estimation to the masses by emphasizing efficiency and deployability.

It provides lightweight, real-time pipelines for body, hand, and face tracking that can run on mobile devices and browsers.

MediaPipe’s BlazePose model is particularly notable. It achieves impressive accuracy with low latency by using a two-step pipeline:

- Detecting the region of interest (the person).

- Estimating precise body keypoints using a compact regression model.

This makes it perfect for fitness tracking apps, AR filters, and real-time web applications, where speed and responsiveness are critical.

Advanced Techniques

The state of the art continues to evolve through several cutting-edge innovations:

Graph Neural Networks (GNNs)

Human body joints naturally form a graph structure, where edges represent spatial or temporal relationships.

By modeling these dependencies using GNNs, pose estimation systems can reason more effectively about the relative motion and configuration of joints, improving robustness in challenging conditions.

Temporal Modeling

Most real-world applications involve videos, not static images.

Temporal models — including LSTMs, Transformers, and 3D CNNs — leverage motion continuity across frames to enhance accuracy and stabilize predictions. They are especially powerful for action recognition, sports analytics, and motion capture.

Multi-task Learning

Modern architectures increasingly combine pose estimation with related tasks such as segmentation, depth estimation, or action recognition.

By learning shared representations, these models generalize better and can infer richer contextual information.

Implementation Tips

Data Preparation

Data quality is everything in pose estimation.

A diverse dataset should include:

- Varied poses — standing, sitting, jumping, etc.

- Different lighting and camera angles

- Multiple environments and clothing types

- Varied body shapes and sizes

For supervised learning, annotation quality is crucial. Tools like COCO Annotator or LabelMe can streamline dataset creation.

Model Selection

The “best” model depends entirely on your project requirements:

| Goal | Recommended Model | Description |

|---|---|---|

| High Accuracy | HRNet, ViTPose | Excellent precision, ideal for research or professional analytics |

| High Speed | MobileNet, BlazePose | Optimized for real-time inference on mobile/embedded devices |

| Balanced Trade-off | EfficientPose, PoseResNet | Moderate compute cost, good for production-scale systems |

Optimization Strategies

Improving model performance often comes down to fine-tuning and optimization. Here are some best practices:

-

Data Augmentation

Use random rotations, scaling, flipping, and lighting changes to make the model robust. -

Hard Negative Mining

Focus training on challenging samples where the model struggles — this helps refine decision boundaries. -

Loss Functions

Use Mean Squared Error (MSE) for keypoint regression or OKS-based losses (Object Keypoint Similarity) for more sophisticated accuracy metrics. -

Quantization and Pruning

Compress models for deployment on low-power devices without significant loss of accuracy.

Real-World Applications

Sports Analytics

Pose estimation provides precise biomechanical insights — tracking athletes’ movements, optimizing techniques, and preventing injuries.

In sports like boxing, football, and athletics, it enables motion tracking, form evaluation, and tactical analysis.

Fitness Tracking

Smart fitness systems use pose estimation to analyze exercise form, detect errors, and give real-time corrective feedback.

With wearable sensors and cameras, users can receive live coaching without human trainers.

Healthcare

In physical therapy and rehabilitation, pose estimation can monitor patient movements and assess recovery progress.

It allows clinicians to remotely track mobility and ensure correct exercise execution, greatly improving accessibility to care.

Animation and AR/VR

Pose data drives realistic character animation in games, movies, and metaverse environments.

It also powers immersive AR experiences by mapping users’ real-world movements into virtual avatars.

Challenges and Solutions

| Challenge | Description | Solution |

|---|---|---|

| Occlusion | Body parts hidden behind others or objects | Use temporal models and context-aware keypoint prediction |

| Complex Poses | Non-standard or acrobatic movements | Augment datasets with synthetic and 3D-generated data |

| Real-Time Performance | High latency in edge deployment | Apply model quantization, pruning, and distillation |

| Multi-Person Scenes | Overlapping individuals confuse keypoint association | Utilize Part Affinity Fields or Transformer-based grouping |

Future Directions

Pose estimation research continues to push boundaries. The next frontier lies in combining modalities and enhancing spatial understanding:

-

3D Pose Estimation from 2D Inputs

Using monocular images to predict 3D joint coordinates is a key focus area. Models like VideoPose3D and VoxelPose are leading this space. -

Multi-Modal Fusion

Integrating RGB, depth, infrared, and even IMU sensor data allows richer and more accurate human pose understanding. -

Foundation Models for Motion Understanding

Emerging models trained on large-scale motion datasets aim to generalize across domains — similar to how CLIP transformed image-text understanding. -

Edge Deployment and Privacy-Preserving AI

Lightweight models capable of running on edge devices will enable on-device analytics, ensuring user privacy and reducing reliance on cloud computation.

Conclusion

Pose estimation has come a long way — from manually engineered keypoints to sophisticated neural systems capable of understanding human movement at near-human precision.

As models become more accurate, efficient, and context-aware, we can expect even broader integration across industries — from sports performance optimization to virtual reality, healthcare, and robotics.

For researchers, developers, and enthusiasts, the best way to stay ahead is to experiment continually. Explore new architectures, train on diverse datasets, and test on real-world data.

The future of pose estimation lies not only in perfecting accuracy but in enabling machines to understand the language of human motion.

Written by Rohan Mainali — Researcher, Developer, and AI Enthusiast.